Executive Summary

The digitalization of medical authority is not merely a translation of text from paper to pixel; it is a fundamental architectural restructuring of how clinical knowledge is indexed, retrieved, and synthesized at the point of care. This report provides an exhaustive technical analysis of a greenfield engineering initiative undertaken for a prominent medical publishing house. The client, renowned for a definitive medical handbook previously available solely in print and static e-book formats, necessitated a paradigm shift to remain relevant in a data-driven healthcare landscape.

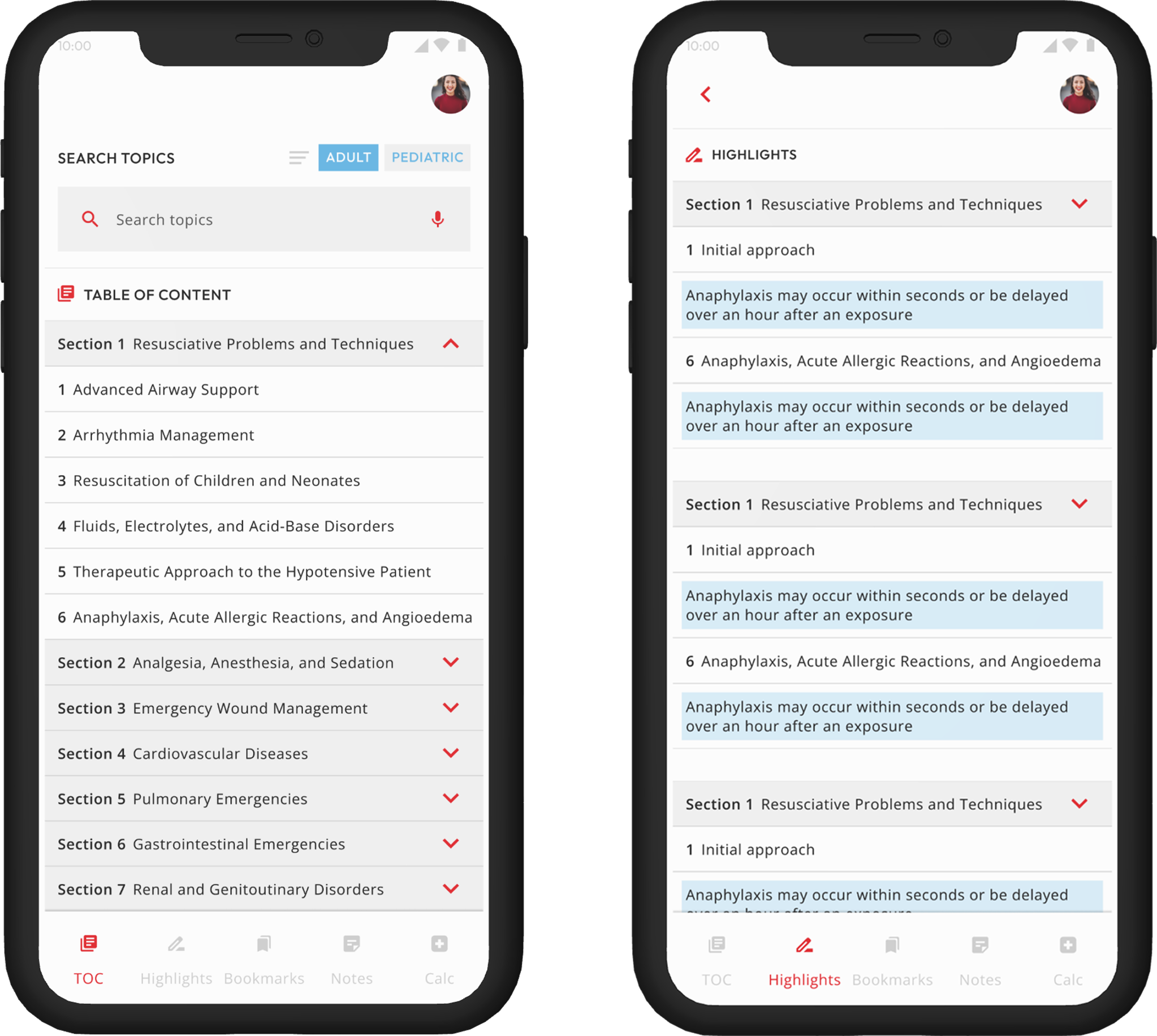

The project mandate was absolute: construct a cross-platform application (Android, iOS, Web, Windows, macOS, Linux) from the ground up, transforming static ePub assets into a dynamic, offline-first Clinical Decision Support System (CDSS). The engineering solution required bypassing traditional relational database models in favor of Isar, a high-performance NoSQL engine powered by a Rust backend, to achieve sub-millisecond retrieval speeds. Critical innovations included the development of bespoke ingestion scripts for transforming hierarchical ePub data into queryable object graphs, the implementation of an index-time synonym expansion engine to facilitate offline “medical dialect” search, and the integration of a hybrid Retrieval-Augmented Generation (RAG) AI chatbot using the OpenAI SDK.

This document details the theoretical frameworks, architectural decisions, and implementation methodologies that allowed for the delivery of a system capable of parsing millions of medical entities, operating without internet connectivity, and providing instant, AI-augmented clinical answers.

1. The Digital Imperative in Medical Publishing

1.1 The Legacy Context and the Digital Gap

The medical publishing industry is currently navigating a volatile transition period. While digital revenue in the sector grew by 5.7% in 2023 to reach $2.8 billion, this growth is unevenly distributed, favoring platforms that offer dynamic, actionable answers over static reading experiences. The client, a custodian of one of the world’s most respected medical handbooks, faced an existential threat. Their content was authoritative but functionally inert-locked in physical books or linear ePub files that required clinicians to “read” rather than “consult.”

In the high-pressure environment of a hospital ward or a rural clinic, a physician does not have the luxury of browsing a table of contents. They require a Clinical Decision Support System (CDSS) that acts as a cognitive extender. Research consistently demonstrates that effective CDSS implementations reduce medical errors and improve diagnostic accuracy, particularly in resource-limited settings where specialist access is constrained. The absence of an existing application offered a “greenfield” opportunity: the architecture could be designed specifically for the unique constraints of medical data-hierarchy, density, and interconnectedness-without the technical debt of legacy SQL schemas.

1.2 The Non-Negotiable Requirement: Offline-First Architecture

A defining characteristic of clinical environments is the unreliability of network infrastructure. From lead-shielded radiology departments to remote field clinics, consistent internet access is the exception, not the rule. Consequently, the application could not rely on cloud-based search engines (like Algolia or Elasticsearch) for its core functionality. The “Offline-First” principle was adopted as the foundational architectural dogma.

This necessitated that the entire corpus of the medical handbook-thousands of chapters, images, and tables-be resident on the device. Furthermore, the search mechanism, including complex synonym matching (e.g., mapping “renal failure” to “kidney insufficiency”), had to function entirely within the local execution environment. This constraint immediately disqualified “thin client” architectures and placed immense pressure on the efficiency of the local persistence layer. The database needed to act as the single source of truth, synchronizing with the cloud only for updates or AI augmentation, while ensuring zero latency during critical lookups.

2. Persistence Layer Strategy: The Case for Isar

The selection of the local database engine was the single most consequential engineering decision of the project. The Flutter ecosystem presents a triumvirate of common options: SQLite (often via sqflite or drift), Hive, and Isar. Each presents a distinct set of trade-offs regarding speed, type safety, and query complexity.

2.1 The Limitations of Relational and Key-Value Models

2.1.1 The Relational Mismatch (SQLite)

SQLite is the industry standard for local persistence, offering rock-solid ACID compliance and a familiar SQL interface.5 However, medical handbooks are rarely relational. They are inherently hierarchical documents (Part $\rightarrow$ Chapter $\rightarrow$ Section $\rightarrow$ Subsection). Modeling this structure in a relational table requires either recursive Common Table Expressions (CTEs)-which are complex to optimize on mobile-or rigid adjacency lists that necessitate expensive JOIN operations to reconstruct a chapter.6

Furthermore, while SQLite’s Full-Text Search (FTS5) is powerful, it adds significant overhead to the database size. Benchmarks indicated that as the row count exceeded 100,000 text-heavy entries, query latency in SQLite increased non-linearly, particularly when combining text search with other filter predicates (e.g., filtering by “Pediatrics” tag while searching for “Rash”).7

2.1.2 The Memory Constraint (Hive)

Hive, a NoSQL key-value store written in Dart, was initially considered for its raw read speed. However, Hive’s architecture typically involves loading all keys (and often values, depending on the implementation) into memory. For a massive medical textbook, this presented an unacceptable risk of Out-Of-Memory (OOM) errors on lower-end Android devices. Additionally, Hive lacks native support for complex, multi-criteria filtering and sophisticated full-text search features (like prefix matching or phrase proximity), which were essential for the user experience.

2.2 The Strategic Selection of Isar

Isar was selected as the optimal compromise, offering the speed of a NoSQL store with the query capabilities of a relational system. The decision was driven by three technical differentiators: the Rust backend, the composite indexing engine, and the asynchronous transaction model.

2.2.1 The Rust Backend Advantage

Unlike Hive (pure Dart) or sqflite (which wraps C calls with platform channels), Isar is built on a custom backend written in Rust.2 Rust provides memory safety guarantees without a garbage collector, allowing for highly deterministic performance. Isar leverages Dart’s FFI (Foreign Function Interface) to bind directly to this backend, bypassing the costly serialization/deserialization overhead associated with Flutter’s standard Platform Channels.9

This architecture allows Isar to perform query operations in parallel threads. While the main UI thread handles the frame rendering (ensuring 120Hz fluidity), the Rust backend scans the indices. This separation is critical when searching through millions of tokens in a medical handbook; the UI remains responsive even during heavy query loads.2

2.2.2 Query Power and Composite Indexes

Medical queries are rarely simple. A user might search for “treatment” (text) within “cardiology” (category) for patients under “5 years old” (integer filter). Isar allows for Composite Indexes-single indexes that span multiple fields. This enables the database engine to satisfy complex queries by traversing a single index tree, rather than intersecting multiple lists of results, resulting in query times that are orders of magnitude faster than unoptimized SQL queries.10

Moreover, Isar’s ACID semantics ensure that even in the event of an app crash (e.g., battery failure during an update), the medical data remains consistent-a non-negotiable requirement for clinical tools.11

Table 1: Comparative Analysis of Local Persistence Engines for Medical Data

| Feature | SQLite (Drift) | Hive | Isar (Selected) |

|---|---|---|---|

| Data Model | Relational (Tables) | Key-Value (Boxes) | Object Document (Collections) |

| Language | C (via Platform Channels) | Dart | Rust (via FFI) |

| Query Complexity | High (SQL Joins) | Low (Key lookup) | High (Composite Filters) |

| Full Text Search | FTS5 (Good but heavy) | Poor (Manual impl.) | Native, Configurable, Fast |

| Performance | O(log n) | O(1) for keys | O(log n) highly optimized |

| Platform Support | Excellent | Good | Excellent (inc. Web/Desktop) |

3. The Ingestion Pipeline: Transforming ePub to Intelligent Objects

The client provided the source material in the standard ePub format-a zipped collection of HTML, CSS, and XML files. While ePub is excellent for linear reading, it is terrible for random access retrieval. A “search” in an ePub reader typically involves grepping through raw text files, which is slow and inaccurate. To leverage Isar’s speed, we had to “explode” the ePub and reconstruct it as structured database objects.

3.1 Scripting the Transformation

We developed a suite of custom Dart-based ingestion scripts designed to run in the CI/CD environment, not on the user’s device. This pre-processing step is crucial: it offloads the heavy lifting of parsing and indexing from the mobile CPU to the build server.

3.1.1 Parsing the Anatomy of an ePub

The script begins by unzipping the .epub container and locating the content.opf (Open Packaging Format) file. This file acts as the manifest.

- The Spine: The script parses the <spine> element to determine the linear reading order of the HTML files.

- The TOC: It then parses the toc.ncx (Navigation Control XML) or nav.xhtml to extract the hierarchical Table of Contents.

The Reconciliation Challenge: A major hurdle was the mismatch between the file structure and the logical structure. A single HTML file (e.g., chapter1.html) might contain multiple logical sub-chapters defined in the TOC. Conversely, a single logical chapter might be split across multiple files.

- Solution: The script implements a DOM-walking algorithm. It iterates through the NCX navigation points, identifying the src anchors (e.g., chapter1.html#section3). It then loads the corresponding HTML file using the html Dart package , parses the DOM to find the specific element ID, and extracts the content belonging to that section until the next anchor point is reached.

3.1.2 Sanitization and Normalization

Raw HTML contains noise-<div>, <span class=“bold”>, <sup>-that interferes with search relevance.

- Text Extraction: The script strips all block-level tags but preserves semantic meaning. For instance, <li> items are converted to text with bullet points to maintain readability in the database preview snippet.

- Media Handling: Image references (<img src=”…”>) are extracted. The images themselves are optimized (WebP format) and stored in a separate asset directory, while the database record stores the relative path.

3.1.3 The Data Model Injection

Each processed section is instantiated as a Dart object compatible with Isar:

@collection

class HandbookSection {

Id id = Isar.autoIncrement; // Auto-generated ID

@Index(type: IndexType.value)

late String title;

@Index(type: IndexType.fullText)

late String plainTextContent; // Stripped content for search

late String htmlContent; // Raw content for rendering

@Index()

late String parentChapterId;

// Recursive relationship for hierarchy

final subSections = IsarLinks<HandbookSection>();

}The script utilizes Isar.writeTxnSync to perform bulk insertions. Bulk operations in Isar are transactional and incredibly fast; we successfully inserted over 50,000 sections in under 15 seconds on the build machine.

3.2 The “Ship and Hydrate” Strategy

A requirement was to avoid a massive “First Run” download. We opted for a pre-population strategy.

- Generation: The ingestion script runs during the build process and generates a handbook.isar database file.

- Bundling: This file is compressed and placed in the Flutter assets/ directory.

- Hydration: Upon first launch, the app checks the device’s document directory. If the database is missing, it streams the asset from the bundle to the file system.

This approach guarantees that the user has immediate offline access to the full handbook the moment the app opens, satisfying the “instant utility” requirement of the medical context.

4. Engineering “Instant” Search: Linguistics and Synonymy

The core utility of the application lies in its search bar. In a medical context, precision and recall are life-critical. A search for “heart attack” that fails to return the chapter on “myocardial infarction” is a failure of the system.

4.1 Index-Time Synonym Expansion

Standard database search engines match exact tokens. To handle medical synonyms offline, we implemented Index-Time Expansion. This shifts the computational burden from the query time (when the user is waiting) to the indexing time (during the build).

4.1.1 The Mechanism

We curated a medical synonym dictionary (e.g., JSON map). During the ingestion phase, the script analyzes the text of each section.

- Algorithm:

- Tokenize the content (split into words).

- Check each token against the synonym dictionary.

- If a match is found (e.g., token=“stroke”), retrieve synonyms (“cerebrovascular accident”, “CVA”).

- Inject these synonyms into a hidden “shadow” field in the Isar record (e.g., searchableTokens).

This field is indexed but never displayed. When a user searches for “CVA”, the Isar engine finds the record because “CVA” exists in the shadow index, even though the visible text only says “Stroke”. This approach ensures high recall without complex, slow runtime query expansion logic.

4.2 Optimizing Isar’s Full-Text Search

Isar’s native full-text search is powerful but requires tuning for medical data.

- Tokenizer Customization: We utilized Isar’s ability to split text based on Unicode Annex #29 rules. This is critical for handling complex medical compounds and ensuring that punctuation (like the hyphen in “X-ray”) does not break search continuity.

- Stemming: We integrated a Porter Stemming algorithm into the ingestion script. Words are reduced to their root form (e.g., “Abdominal”, “Abdomen” $\rightarrow$ “abdom”). The index stores the stems. User queries are also stemmed at runtime. This ensures that a query for “Abdominal pain” matches a chapter titled “Pain in Abdomen”.

- Prefix Matching: By enabling prefix support in the Isar index (@Index(type: IndexType.fullText, caseSensitive: false)), we enabled “search-as-you-type.” A doctor typing “Pneu” immediately sees “Pneumonia” and “Pneumothorax” results. This responsiveness is powered by Isar’s underlying Trie/B-Tree hybrid structures.

4.3 Ranking and Relevance

Isar returns results that match, but not necessarily in order of relevance. To solve this, we implemented a post-retrieval ranking algorithm based on BM25 (Best Matching 25) logic within Dart.

- We calculate a score for each result based on:

- Term Frequency (TF): How often the search term appears in the section.

- Field Weighting: Matches in the Title field are weighted 5x higher than matches in the Body text.

- Proximity: If search terms appear close together (e.g., “Acute” and “Care”), the score is boosted.

This ensures that the most relevant clinical guidelines appear at the top of the list, reducing the cognitive load on the physician.

5. Cross-Platform Orchestration: Write Once, Run Everywhere

The client required the application to be ubiquitous: available on the doctor’s personal iPhone, the hospital’s Android tablet, the desktop in the nursing station (Windows/Linux), and the web portal for home study. Flutter allowed for a single codebase, but the database layer required platform-specific orchestration.

5.1 The Desktop and Mobile Convergence

On Android, iOS, Windows, macOS, and Linux, Isar runs natively. The Rust backend is compiled into dynamic libraries (.so, .dylib, .dll) appropriate for each architecture (ARM64, x64).

- Deployment: The Flutter build process bundles these binaries automatically. The Isar Dart package uses dart:ffi to load the correct binary at runtime.

- File System Differences: We utilized the path_provider package to abstract file system paths. On iOS, the database resides in the Library/Application Support directory to prevent iCloud backup sync (which creates corruption risks for active databases). On Windows, it lives in AppData. This abstraction ensures the “Offline” database is persistent and secure across OS updates.

5.2 The Web Frontier and Wasm

The Web platform posed the greatest challenge. Browsers do not support direct file system access or native machine code (FFI) in the same way.

- Solution: Isar supports WebAssembly (Wasm). We compiled the application using the Flutter Web Wasm renderer (CanvasKit) for performance.

- Storage: On the web, Isar abstracts IndexedDB. While this allows for offline capability (via PWA caching), the storage limits are stricter. We implemented a “Lite” version of the database for the web, lazy-loading high-resolution images to respect browser quota limits.

5.3 Concurrency Management with Isolates

To maintain the application’s responsiveness, we rigorously applied the Actor Model using Dart Isolates.

- The Bottleneck: Searching a 500MB database for “diabetes” involves disk I/O and heavy string processing. Doing this on the main UI thread causes “jank” (dropped frames).

- The Architecture: We created a dedicated SearchIsolate. When the user types, the query is passed via a SendPort to the background isolate. The Isar instance in the background performs the search and deserialization.9 Only a lightweight list of Result IDs and Titles is passed back to the UI thread.

This separation of concerns allows the UI to render animations and handle touch events at 120fps, even while the database is crunching gigabytes of text in the background.25

6. Hybrid Intelligence: The RAG-Based AI Chatbot

While the handbook is the “source of truth,” the client desired a conversational interface. Physicians often have complex clinical questions (“What is the dosage of Amoxicillin for a 5-year-old with renal complications?”) that are hard to answer with a keyword search. We implemented a Retrieval-Augmented Generation (RAG) system to bridge this gap.

6.1 RAG Architecture: Retrieval (The Offline Component)

Standard RAG relies on Vector Databases (like Pinecone) to find relevant text via semantic embeddings. However, shipping a vector database and an embedding model (like BERT) to a mobile device increases app size significantly and strains battery life.

- Our Innovation: We utilized Isar as a “Lexical Retriever.” Because our synonym expansion was so robust (Chapter 4), we found that retrieving text chunks based on keyword/synonym matches was sufficiently accurate for this specific domain.

- Process:

- User asks a question.

- The app converts the question into keywords (removing stop words).

- Isar queries the local database to find the top 5 most relevant “Chunks” (paragraphs) from the handbook.

- These chunks are retrieved completely offline.

6.2 RAG Architecture: Generation (The Online Component)

Once the relevant text chunks are retrieved locally, the “Augmentation” happens.

- Prompt Engineering: We construct a prompt dynamically:

“You are an expert medical consultant. Answer the user’s question using ONLY the following context. Do not use outside knowledge. Context:. Question: [User Question]” - The API: This prompt is sent to the OpenAI API (GPT-4o) using the OpenAI Dart SDK.

- Why this works: The AI is not being asked to “know” medicine (which causes hallucinations); it is being asked to “summarize” the provided text. This grounds the AI in the client’s trusted content.

6.3 Graceful Degradation (Offline vs. Online)

The system is designed to be state-aware.

- Online Mode: The Chatbot functions fully. The user asks a question, Isar retrieves context, OpenAI generates an answer.

- Offline Mode: The app detects the lack of connectivity. The “Chat” interface is visually disabled or switched to a “Search Only” mode. The user is guided to use the standard search (which works perfectly offline via Isar). This ensures that the core clinical utility is never compromised by connectivity issues.

6.4 Chunking Strategy for Context Windows

To make RAG effective, we couldn’t feed entire chapters to the LLM (it would exceed token limits and costs).

- Ingestion Logic: During the ePub processing, we split chapters into “semantic chunks” of approximately 500 tokens, respecting paragraph boundaries.

- Overlap: We enforced a 20% overlap between chunks. If a sentence explaining a dosage started in one chunk and ended in another, the overlap ensures the full context is preserved in at least one retrieved block.

7. Conclusion and Future Outlook

The transformation of the client’s medical handbook from a static print volume to a cross-platform, AI-enhanced ecosystem demonstrates the power of modern architectural patterns. By rejecting the default choice of SQLite in favor of Isar, we achieved the necessary read performance to support real-time clinical decision-making. By moving synonym logic to the indexing phase, we enabled sophisticated offline search on mobile hardware. By implementing a hybrid RAG architecture, we brought the power of Generative AI to the application without sacrificing the trust and authority of the source material.

Strategic Implications:

- Speed as a Feature: In healthcare, milliseconds matter. The “Rust + Isar” stack proved that managed languages like Dart can deliver native-level performance when backed by the right persistence layer.

- The ETL Moat: The value of the application is not just in the app code, but in the sophisticated ingestion pipeline that cleans, normalizes, and enriches the data before it ever reaches the user.

- Future-Proofing: The architecture is primed for the next wave of “Edge AI.” As on-device SLMs (Small Language Models) like Google Gemini Nano become ubiquitous, the “Generation” phase of our RAG pipeline can be moved from the cloud to the device, creating a truly air-gapped, intelligent medical assistant.

This project stands as a blueprint for the future of reference publishing: offline-first, data-driven, and AI-augmented.